Table of contents

TDD anti-patterns - episode 3 - the nitpicker, the secret catcher, the dodger and the Loudmouth - with code examples in javascript, kotlin and php

The content here is under the Attribution 4.0 International (CC BY 4.0) license

This is a follow up on a series of posts around TDD anti-patterns. In the previous episode we covered the mockery, the inspector, the generous leftovers and the local hero, those are part of 22 anti-patterns listed by James Carr.

Do you prefer this content in video format? The video is available on demand in livestorm.

In this blog post we focus on four more anti-patterns: The nitpicker, The secret catcher, The dodger, and The Loudmouth. Each addresses a specific aspect of code that makes testing harder. These anti-patterns are commonly found in those new to Test-Driven Development (Beck, 2000), especially in those lacking experience writing test-driven applications.

Related to Episode 2: The Secret Catcher extends The Generous Leftovers—both involve hidden failures. The Loudmouth relates to The Local Hero—both create environment-specific test behavior.

The nitpicker

The nitpicker, as the definition goes, is noted in web applications where the need to assert the output is focused on an entire object rather than the specific property needed. This is common for JSON structures as depicted in the first example.

The following code1 asserts that an application has been deleted. In this context, an application is a regular entry in the database with the label “application”.

Note that this example in PHP is used to assert the exact output from the HTTP request, nothing more, nothing less.

<?php

public function testDeleteApplication()

{

$response = $this->postApplication();

$this->assertFalse($response->error);

$this->delete('api/application/' . $response->data)

->assertExactJson([ // is this needed?

'data' => (string) $response->data,

'error' => false

]);

}

In this sense, this test is fragile for a specific reason: if we add another property to the response, it will fail complaining that the JSON has changed. For removing properties, such failure would be helpful. On the other hand, adding a new property should not cause a failure.

The “fix” would be to replace the “exact” idea in this assertion to be less strict, such as the following:

<?php

public function testDeleteApplication()

{

$response = $this->postApplication();

$this->assertFalse($response->error);

$this->delete('api/application/' . $response->data)

->assertJson([ // <!--- changing this assertion

'data' => (string) $response->data,

'error' => false

]);

}

The change here is to assert that the desired fragment is indeed in the output, no matter if there are other properties in the output, as long as the desired one is there. This simple change opens up the door to move away from the fragile test we had in the first place.

Another way to not face the nitpicker is to look for the properties you want to. The following code is from an open source project that handles the S3 signing process to access a resource in Amazon S3:

describe('#getSignedCookies()', function() {

it('should create cookies object', function(done) {

var result = CloudfrontUtil.getSignedCookies(

'http://foo.com', defaultParams);

expect(result).to.have.property('CloudFront-Policy');

expect(result).to.have.property('CloudFront-Signature');

expect(result).to.have.property('CloudFront-Key-Pair-Id');

done();

});

});

The code has three assertions to assert that it has the desired property instead of checking all at once regardless of the output.

Another example of how to approach such assertions is the code extracted from an open source project that aims to collect and process the four key metrics:

@Test

fun `should calculate CFR correctly by monthly and the time split works well ( cross a calendar month)`() {

val requestBody = """ { skipped code } """.trimIndent()

RestAssured

.given()

.contentType(ContentType.JSON)

.body(requestBody)

.post("/api/pipeline/metrics")

.then()

.statusCode(200)

.body("changeFailureRate.summary.value", equalTo(30.0F))

.body("changeFailureRate.summary.level", equalTo("MEDIUM"))

.body("changeFailureRate.details[0].value", equalTo("NaN"))

.body("changeFailureRate.details[1].value", equalTo("NaN"))

.body("changeFailureRate.details[2].value", equalTo(30.0F))

}

Once again, the RestAssured looks for property by property in the output instead of comparing everything in the output as depicted in the first example.

Testing frameworks usually offer such utility to help developers to test. For example, in the first example, Laravel uses the syntax assertJson/assertExactJson. The second example uses chai to depict how to assert specific properties in an object. Last but not least, RestAssured is the one used to depict how to deal with the nitpicker in the Kotlin ecosystem.

The nitpicker - root causes

- Overspecifying expectations in assertions beyond what behavior requires

- Lack of awareness about framework assertion utilities (partial matching vs. exact matching)

- Testing implementation details instead of behavior

The secret catcher

The secret catcher commonly appears across codebases and often results from time pressure to deliver features. The test is called “secret” because the expected exception isn’t obvious from the code. Instead of explicitly handling it, the test case ignores it. The secret catcher also relates to The Greedy Catcher, which we cover later.

The following example written in vuejs with apollo client is an attempt to depict such a scenario, where the method seems to be fine and does what it is supposed to do. In other words, it sends a mutation to the server to remove the payment type from the associated user, and in the end, it updates the UI to reflect that:

async removePaymentMethod(paymentMethodId: string) {

this.isSavingCard = true;

const { data } = await this.$apolloProvider.clients.defaultClient.mutate({

mutation: DetachPaymentMethodMutation,

variables: { input: { stripePaymentMethodId: paymentMethodId } },

});

if (this.selectedCreditCard === paymentMethodId) {

this.selectedCreditCard = null;

}

this.isSavingCard = false;

}

The JavaScript test is written using jest and the testing library. Here is a challenge for you: before going any further in the text, can you spot what is missing from the test case?

test('it handles error when removing credit card ', async () => {

const data = await Payment.asyncData(asyncDataContext);

data.paymentMethod = PaymentMethod.CREDIT_CARD;

const { getAllByText } = render(Payment, {

mocks,

data() {

return { ...data };

},

});

const [removeButton] = getAllByText(Remove');

await fireEvent.click(removeButton);

});

Let’s start with the missing assertion at the end of the test case. If you haven’t noticed, the last step that the test does is to wait for the click event, and that’s it. For a feature that removes a payment method from a user, asserting that a message is shown would be a good idea.

Besides that, the test case relies on a specific setup where the GraphQL mutation will always work. In other words, if the GraphQL mutation throws an exception in the production code, there is no sign of it being handled. In this scenario, the test case relies on jest to report the error if any.

The secret catcher - root causes

- Incomplete understanding of exception handling in testing frameworks

- Rushing to make tests pass without proper assertion

- Lack of test-first practice—assertions are afterthoughts instead of upfront specifications

The dodger

The dodger is, in my experience, the most common anti-pattern when learning TDD. Before examining code, let me explain why it occurs.

Writing test-first code means writing a failing test first, making it pass, then refactoring. While straightforward in principle, two questions commonly arise: “What should I test?” and “How small should each step be?”. Without clear answers, developers often fall into the dodger by testing implementation details rather than desired behavior.

Simple Example: Testing Implementation Details

Here’s a straightforward example that demonstrates the dodger:

// Production code

class User {

constructor(name, email) {

this.name = name;

this.email = email;

this._validated = false;

}

validate() {

if (!this.email.includes('@')) {

throw new Error('Invalid email');

}

this._validated = true;

}

isValid() {

return this._validated;

}

}

// THE DODGER: Testing implementation details (getters/setters) instead of behavior

test('user validation sets internal flag', () => {

const user = new User('John', 'john@example.com');

expect(user._validated).toBe(false); // Testing private property!

user.validate();

expect(user._validated).toBe(true); // Testing private property!

});

The problem: The test is tightly coupled to the implementation. If you refactor _validated to _isValid or store it differently, the test breaks even though the behavior (validation) is the same.

Better approach: Test the behavior, not the implementation

test('user validation accepts emails with @', () => {

const user = new User('John', 'john@example.com');

// Should not throw

expect(() => user.validate()).not.toThrow();

expect(user.isValid()).toBe(true); // Test public behavior

});

test('user validation rejects emails without @', () => {

const user = new User('John', 'invalid-email');

expect(() => user.validate()).toThrow('Invalid email');

});

This approach tests the desired behavior (validation rules) without depending on internal implementation details.

Complex Example: Multi-field Validation

The following more complex example demonstrates the dodger across multiple fields:

<?php

namespace Drupal\druki_author\Data;

use Drupal\Component\Utility\UrlHelper;

use Drupal\Core\Language\LanguageManager;

use Drupal\Core\Locale\CountryManager;

/**

* Provides author value object.

*/

final class Author {

/** skipped protected properties to fit code here */

/**

* Builds an instance from an array.

*

* @param string $id

* The author ID.

* @param array $values

* The author information.

*/

public static function createFromArray(string $id, array $values): self {

$instance = new self();

if (!\preg_match('/^[a-zA-Z0-9_-]{1,64}$/', $id)) {

throw new \InvalidArgumentException('Author ID contains not allowed characters, please fix it.');

}

$instance->id = $id;

if (!isset($values['name']) || !\is_array($values['name'])) {

throw new \InvalidArgumentException("The 'name' value is missing or incorrect.");

}

if (\array_diff(['given', 'family'], \array_keys($values['name']))) {

throw new \InvalidArgumentException("Author name should contains 'given' and 'family' values.");

}

$instance->nameGiven = $values['name']['given'];

$instance->nameFamily = $values['name']['family'];

if (!isset($values['country'])) {

throw new \InvalidArgumentException("Missing required value 'country'.");

}

$country_list = \array_keys(CountryManager::getStandardList());

if (!\in_array($values['country'], $country_list)) {

throw new \InvalidArgumentException('Country value is incorrect. It should be valid ISO 3166-1 alpha-2 value.');

}

$instance->country = $values['country'];

if (isset($values['org'])) {

if (!\is_array($values['org'])) {

throw new \InvalidArgumentException('Organization value should be an array.');

}

if (\array_diff(['name', 'unit'], \array_keys($values['org']))) {

throw new \InvalidArgumentException("Organization should contains 'name' and 'unit' values.");

}

$instance->orgName = $values['org']['name'];

$instance->orgUnit = $values['org']['unit'];

}

if (isset($values['homepage'])) {

if (!UrlHelper::isValid($values['homepage']) || !UrlHelper::isExternal($values['homepage'])) {

throw new \InvalidArgumentException('Homepage must be valid external URL.');

}

$instance->homepage = $values['homepage'];

}

if (isset($values['description'])) {

if (!\is_array($values['description'])) {

throw new \InvalidArgumentException('The description should be an array with descriptions keyed by a language code.');

}

$allowed_languages = \array_keys(LanguageManager::getStandardLanguageList());

$provided_languages = \array_keys($values['description']);

if (\array_diff($provided_languages, $allowed_languages)) {

throw new \InvalidArgumentException('The descriptions should be keyed by a valid language code.');

}

foreach ($values['description'] as $langcode => $description) {

if (!\is_string($description)) {

throw new \InvalidArgumentException('Description should be a string.');

}

$instance->description[$langcode] = $description;

}

}

if (isset($values['image'])) {

if (!\file_exists($values['image'])) {

throw new \InvalidArgumentException('The image URI is incorrect.');

}

$instance->image = $values['image'];

}

if (isset($values['identification'])) {

if (isset($values['identification']['email'])) {

if (!\is_array($values['identification']['email'])) {

throw new \InvalidArgumentException('Identification email should be an array.');

}

$instance->identification['email'] = $values['identification']['email'];

}

}

return $instance;

}

public function getId(): string {

return $this->id;

}

public function getNameFamily(): string {

return $this->nameFamily;

}

public function getNameGiven(): string {

return $this->nameGiven;

}

public function getCountry(): string {

return $this->country;

}

public function getOrgName(): ?string {

return $this->orgName;

}

public function getOrgUnit(): ?string {

return $this->orgUnit;

}

public function getHomepage(): ?string {

return $this->homepage;

}

public function getDescription(): array {

return $this->description;

}

public function getImage(): ?string {

return $this->image;

}

public function checksum(): string {

return \md5(\serialize($this));

}

public function getIdentification(?string $type = NULL): array {

if ($type) {

if (!isset($this->identification[$type])) {

return [];

}

return $this->identification[$type];

}

return $this->identification;

}

}

The goal here is to validate the Author object before creating it from an array. To be created, the given array should hold valid data, and if not, an exception will be thrown. Then, next up is the testing code:

<?php

/**

* Tests that objects works as expected.

*/

public function testObject(): void {

$author = Author::createFromArray($this->getSampleId(), $this->getSampleValues());

$this->assertEquals($this->getSampleId(), $author->getId());

$this->assertEquals($this->getSampleValues()['name']['given'], $author->getNameGiven());

$this->assertEquals($this->getSampleValues()['name']['family'], $author->getNameFamily());

$this->assertEquals($this->getSampleValues()['country'], $author->getCountry());

$this->assertEquals($this->getSampleValues()['org']['name'], $author->getOrgName());

$this->assertEquals($this->getSampleValues()['org']['unit'], $author->getOrgUnit());

$this->assertEquals($this->getSampleValues()['homepage'], $author->getHomepage());

$this->assertEquals($this->getSampleValues()['description'], $author->getDescription());

$this->assertEquals($this->getSampleValues()['image'], $author->getImage());

$this->assertEquals($this->getSampleValues()['identification'], $author->getIdentification());

$this->assertEquals($this->getSampleValues()['identification']['email'], $author->getIdentification('email'));

$this->assertEquals([], $author->getIdentification('not exist'));

$this->assertEquals($author->checksum(), $author->checksum());

}

The first thing that I noted when skimming through the code is that if I need to change how I get the author name (rename the method, for example), it is required to change the test code, even though the desired behavior hasn’t changed. The validation (the current behavior) is still required.

Another approach would be to rewrite the single test case by splitting it into multiple test cases, catching the desired exception if an undesired value is passed, then encapsulate it in a validator class to prevent the coupling between the test and production code.

The dodger - root causes

- Lack of test-first discipline—writing tests after implementation leads to testing implementation instead of behavior

- Unclear understanding of what constitutes a test’s responsibility (behavior over structure)

- No emphasis on refactoring without breaking tests

The Loudmouth

When developing, adding some traces to see if what the code is doing matches the developer’s understanding is something common. We can call that debugging, and it’s often used when a developer needs to understand a piece of code.

TDD practitioners argue that once TDD is practiced, no debugging tool is needed (Freeman & Pryce, 2009), be it a print statement or be it adding breakpoints into the code.

Therefore, what happens if you don’t have that much experience with TDD?

Often, the answer is a mix of both: debugging and using the tests to communicate with you. For example, the following code depicts a test code that can handle an error if it happens to receive an invalid JavaScript code. Keep in mind that the code is used to parse JavaScript code and act upon its result:

test.each([['function']])(

'should not bubble up the error when a invalid source code is provided',

(code) => {

const strategy = jest.fn();

const result = Reason(code, strategy);

expect(strategy).toHaveBeenCalledTimes(0);

expect(result).toBeFalsy();

}

);

The check is straightforward. It checks if the desired strategy wasn’t called as the code is an invalid JavaScript code, and also checks if the result from that was the boolean false. Let’s see now what the implementation of this test looks like:

const reason = function(code, strategy) {

try {

const ast = esprima.parseScript(code);

if (ast.body.length > 0) {

return strategy(ast);

}

} catch (error) {

console.warn(error); // < ---------- this is loud

return false;

}

};

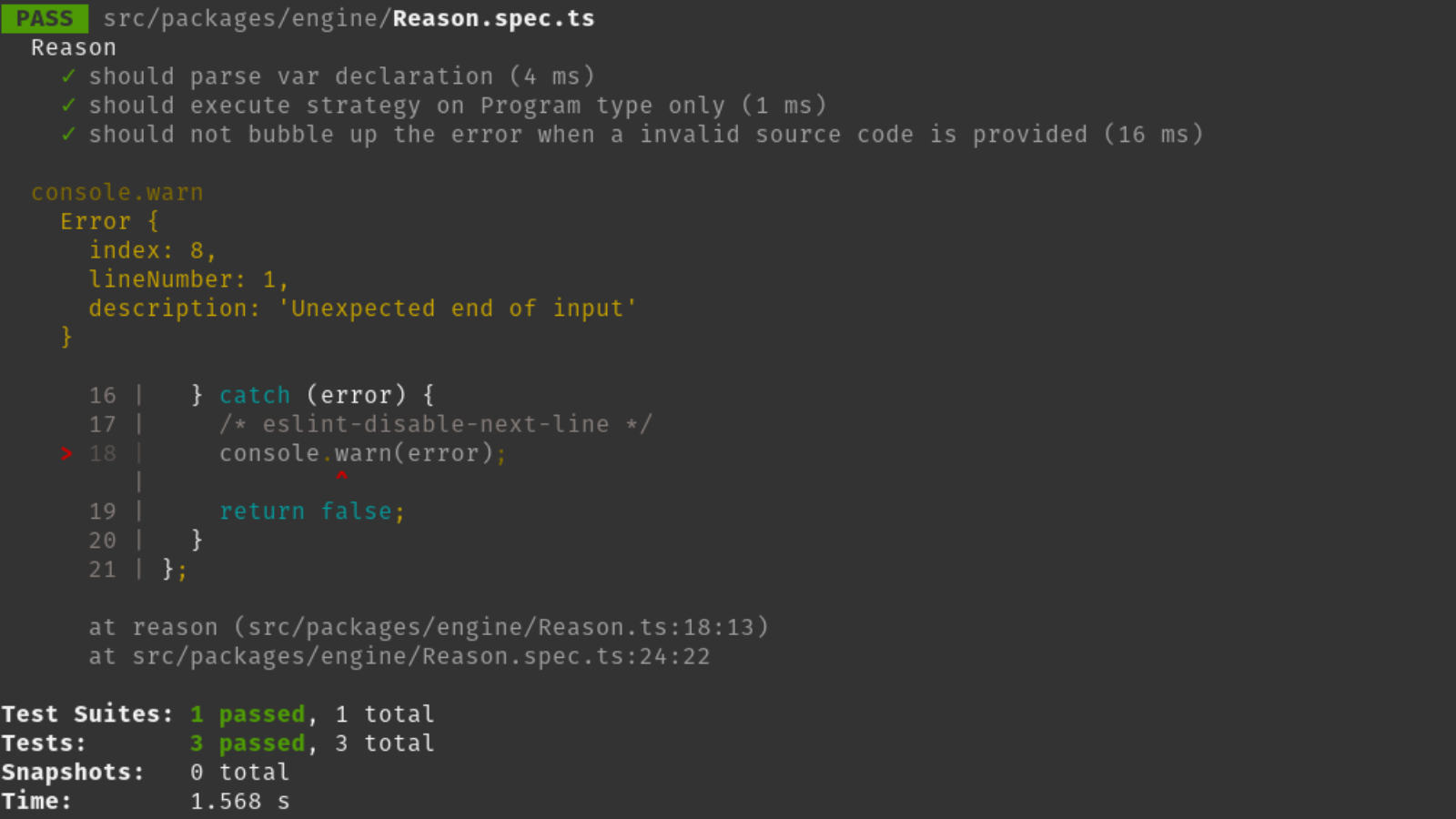

Ideally, working in a TDD fashion, the console.log used would be mocked from the start. As it would require a verification to when it was called and with which message. This first hint already points to an approach that is not test first. The following image depicts what the loudmouth causes: even though the tests are green, there is a warning message. Did the test pass? Did the change break something?

(Freeman & Pryce, 2009) gives an idea on why logging (such as this console.log) should be treated as a feature instead of a random log that is used for whatever reason.

The following snippet depicts a possible implementation mocking out the console.log and preventing the message from being displayed during test execution:

const originalConsole = globalThis.console;

beforeEach(() => {

globalThis.console = {

warn: jest.fn(),

error: jest.fn(),

log: jest.fn()

};

});

afterEach(() => {

globalThis.console = originalConsole;

});

With the mocked console, now it’s possible to assert the usage of it instead of printing the output while running the tests. The version without the loudmouth would be the following:

const originalConsole = globalThis.console;

beforeEach(() => {

globalThis.console = {

warn: jest.fn(),

error: jest.fn(),

log: jest.fn()

};

});

afterEach(() => {

globalThis.console = originalConsole;

});

test.each([['function']])(

'should not bubble up the error when a invalid source code is provided',

(code) => {

const strategy = jest.fn();

const result = Reason(code, strategy);

expect(strategy).toHaveBeenCalledTimes(0);

expect(result).toBeFalsy();

expect(globalThis.console.warn).toHaveBeenCalled(); // < -- did it warn?

}

);

The Loudmouth - root causes

- Not practicing test-first—developers add logging statements during debugging instead of assertions

- Confusing debugging with testing—print statements serve a purpose during development but should be removed or converted to assertions

- Insufficient mocking or isolation of external dependencies (like console)

Wrapping up

In this post, we went through four more anti-patterns that are related to developers that are starting with TDD. Those covered are also the least popular anti-patterns that came up in the survey: The secret catcher at 7th, The nitpicker, The dodger, and the loudmouth at 8th.

We saw that the secret catcher is tricky, and it seems to be testing what it is supposed to test, but at a close inspection, we spot the mishandling of exceptions. The nitpicker, on the other hand, points to fragile tests, in which the assertion used to test focuses on more things than it needs.

The dodger is the classic anti-pattern when starting with TDD, creating a one-to-one relationship between tests and production code. The Loudmouth can cause developers to question whether a passing test is truly green, as console output pollutes the test results during execution.

All in all, we reached a point where we covered more than 50% of the 22 anti-patterns listed by James Carr. These four added to our toolkit more information while test driving applications.

References

- Beck, K. (2000). TDD by example. Addison-Wesley Professional.

- Freeman, S., & Pryce, N. (2009). Growing object-oriented software, guided by tests. Pearson Education.

Appendix

Edit Feb 10, 2021 - Codurance talk

Presenting the tdd anti-patterns at Codurance talk.

Footnotes

Changelog

- Feb 15, 2026 - Grammar fixes and minor rephrasing for clarity

-

The code is an adaptation of a code from github and the original source code can be found at ↩