An introduction to software testing and learning resources

The content here is under the Attribution 4.0 International (CC BY 4.0) license

TLDR

- Software testing is essential for ensuring software quality and compatibility with various contexts and programming languages

- Verification and validation are crucial in software testing, ensuring that software meets specified requirements and customer needs

- Accurate documentation of software requirements is vital for generating correct tests and ensuring software does what it is intended to do

- Unit testing is a type of testing performed by the programmer during software code development

- TDD (Test-Driven Development) is a methodology where the programmer writes test code before the actual software code, aiming to improve feedback and reduce the gap between decision and feedback

A note before starting

Hello there! If you are here, it is probably because you want to understand more about what software testing is and the contexts in which it happens. That is great, because the first part of this series about software testing goes exactly in this direction. However, the content that follows does not aim to be an extensive guide; instead, it draws on a variety of sources that define software testing and its role in the software development context.

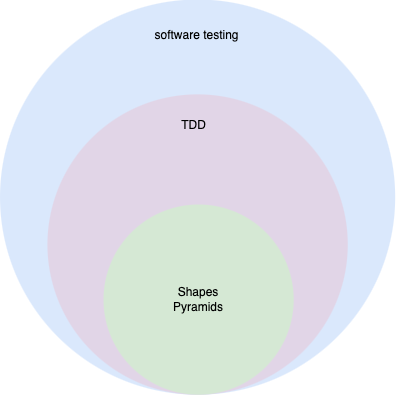

Software testing is a broader field than TDD; however, TDD is an area of knowledge in its own right, with different variations of the practice. The testing shapes covered later build on both software testing and TDD, which is why they are embedded as the last level.

Introduction

Have you ever wondered what the practice of testing is? Testing is present in our everyday actions: when we wake up, when we go to work, or even when we open a door. Does it work? How long will it last? What happens if I try to pass without opening it?

If we take the place of a seller who wants to sell a door, customers will ask those questions, and more, before buying it. This hypothetical scenario gives us the ground to start narrowing down the context in which the testing activity takes place. Can we find the correct answers to those questions? In the door example, we can start by looking at the core definition of the word test. According to dictionary.com, a test is:

- the means by which the presence, quality, or genuineness of anything is determined; a means of trial.

- the trial of the quality of something: to put to the test.

- a particular process or method for trying or assessing.

We can quickly adapt this definition to our door scenario. For example, the first step would be to submit the product to a series of tests, such as material resistance, durability, lifetime, and so on. This would give us the numbers needed to understand the different characteristics of the door. However, it does not mean that the door has been tested in every possible scenario in which it will be used. In other words, the essence of a test is to confirm that a given element does what it is supposed to do: no more, no less.

Moving from our door example to software development, the same holds. Software needs to go through a battery of tests to make sure that it works in different environments, with different programming language versions, and even on different devices. Wikipedia defines software testing as follows:

Software testing is an investigation conducted to provide stakeholders with information about the quality of the product or service under test. Software testing can also provide an objective, independent view of the software to allow the business to appreciate and understand the risks of software implementation. Test techniques include the process of executing a program or application with the intent of finding software bugs (errors or other defects), and to verify that the software product is fit for use.

Traditional software development teams often delegate testing to a QA (Quality Assurance) area, whose purpose is to guarantee the quality of the software before it is delivered to the client. This model was inspired by the waterfall model of development. More recently, teams have been shifting left: moving testing activities earlier so that they happen while the application is being developed rather than afterwards.

More broadly, Sommerville defines software testing as the task of showing that software does what it is intended to do and discovering its defects before the software is put into use (Sommerville, 2016). According to the same author, the goals of software testing can be divided into two parts:

- Demonstrate to the client and developer that the software meets the specified requirements

- Expose some unwanted behavior
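To make the two goals concrete, here is a minimal sketch in Python. The `apply_discount` function and its rules are hypothetical, invented only for this illustration: one test demonstrates a specified requirement, and the other probes for unwanted behavior.

```python
def apply_discount(price: float, percent: float) -> float:
    """Hypothetical function under test: reduce `price` by `percent`."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


# Goal 1: demonstrate that the software meets a specified requirement.
def test_ten_percent_discount_meets_requirement():
    assert apply_discount(200.0, 10) == 180.0


# Goal 2: expose unwanted behavior, e.g. a discount above 100%
# that would otherwise produce a negative price.
def test_discount_above_100_percent_is_rejected():
    try:
        apply_discount(200.0, 150)
    except ValueError:
        return  # expected: invalid input is rejected
    raise AssertionError("expected ValueError for a 150% discount")
```

The second test only exists because someone asked "what should happen if…?"; that question is where unwanted behavior tends to hide.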

These two goals give an overview of what we find in the software testing process. The first aims to demonstrate to the client that what is being built is exactly what was previously specified. The client here does not need to be a person; it is often an entity, such as a company.

The second goal is the opposite: exposing undesirable behavior and even possible failures for a given set of input data. This behavior should also be explicitly stated in the software specifications, even if it is undesirable. Such an effort aims to give customers predictability; however, as Dijkstra noted, “Program testing can be used to show the presence of bugs, but never to show their absence” (Dijkstra, 1971) (more on that on Wikipedia). In other words, software testing is challenging and exhaustive testing is impossible, so developers must make trade-offs in practice (Aniche et al., 2019).
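Dijkstra's point can be shown in a few lines. In this sketch, `is_leap_year` is a hypothetical, deliberately incomplete implementation: every sampled test passes, yet the function is still wrong. The standard library's `calendar.isleap` serves as a trusted oracle here, a luxury real projects rarely have, which is exactly why exhaustive checking is usually out of reach.

```python
import calendar


def is_leap_year(year: int) -> bool:
    # Deliberately incomplete: ignores the Gregorian 100/400-year rules.
    return year % 4 == 0


def test_sampled_years():
    # All of these pass, so the suite is "green".
    assert is_leap_year(2024)
    assert not is_leap_year(2023)
    assert is_leap_year(2000)


def find_counterexamples(years):
    # Compare against a trusted oracle to reveal inputs the tests never tried.
    return [y for y in years if is_leap_year(y) != calendar.isleap(y)]


if __name__ == "__main__":
    test_sampled_years()
    print(find_counterexamples(range(1800, 2101)))  # [1800, 1900, 2100]
```

The passing tests showed no bugs, but the bug was there all along for century years such as 1900.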

Verification and Validation

Verification and validation offer a slightly broader view of software testing, but they are directly related to the first goal described in the previous section. Verification refers to the tasks that ensure that the software correctly implements its specification. Validation refers to a different set of tasks that ensure that the software is built according to the customer’s needs (Pressman, 2005).

According to Sommerville (Sommerville, 2016) and Pressman (Pressman, 2005), both expected and unexpected behaviors must be described in the documentation of the system in question. However, the literature shows that, like testing, software requirements are a subject that demands an abstraction of the system from those who practice it, which can be costly for people without prior experience.

This leaves a gap not in one but in two aspects of the software development cycle: testing and requirements. It leads to the hypothesis that the two are directly connected when it comes to delivering software that meets the customer’s expectations.

Requirements dictate what the programmer must write, what the results should be, and what the customer expects. The testing phase then uses this information to check what was developed, confirming that the software does what it sets out to do. Accurate software requirements are therefore essential for creating and executing tests: if a requirement is wrong, the test derived from it will also be wrong.
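Here is a small sketch of how a requirement turns into tests. The requirement "orders of 100 or more ship free" and the `shipping_cost` function are hypothetical. Notice that the boundary test encodes the requirement's wording directly: had the requirement been written down wrongly as "more than 100", the test would have been written wrongly too, and code and test would agree with each other while both disagreeing with the customer.

```python
# Hypothetical requirement R1:
# "Orders of 100 or more ship free; otherwise shipping costs 9.99."
FREE_SHIPPING_THRESHOLD = 100
STANDARD_SHIPPING = 9.99


def shipping_cost(order_total: float) -> float:
    return 0.0 if order_total >= FREE_SHIPPING_THRESHOLD else STANDARD_SHIPPING


def test_r1_order_at_threshold_ships_free():
    # Boundary value taken straight from the wording "100 or more".
    assert shipping_cost(100) == 0.0


def test_r1_order_below_threshold_pays_shipping():
    assert shipping_cost(99.99) == STANDARD_SHIPPING
```

Naming each test after the requirement it verifies (here `r1`) keeps that link visible when the requirement changes.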

When Do Verification and Validation Start and End?

According to Pezzè and Young, verification and validation start as soon as we decide to build a software product, or even before (Pezzè & Young, 2008). Agile practice pushes this approach forward with the inception activities of Behaviour-Driven Development (BDD) and the “three amigos” ceremony.
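BDD scenarios agreed in a three amigos session are commonly written in a Given/When/Then form. The sketch below maps one such scenario onto a plain Python test; the `Cart` class is hypothetical, and dedicated BDD tools would normally keep the scenario text in a separate feature file.

```python
from dataclasses import dataclass, field


@dataclass
class Cart:
    """Hypothetical shopping cart used only for this illustration."""
    items: list = field(default_factory=list)

    def add(self, name: str, price: float) -> None:
        self.items.append((name, price))

    def total(self) -> float:
        return sum(price for _, price in self.items)


def test_adding_an_item_updates_the_total():
    # Given an empty cart
    cart = Cart()
    # When the customer adds a book costing 20
    cart.add("book", 20.0)
    # Then the cart total is 20
    assert cart.total() == 20.0
```

Because the scenario is agreed before any code exists, it acts as verification and validation input from the very start.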

Unit testing

Unit testing is the type of testing in which tests are created and executed by the programmer while the software code is being written. This sets it apart from black-box and white-box testing, which are often carried out by a professional dedicated to testing who does not write the code in question. Sommerville (2016) defines unit testing as the process of testing individual program components, such as methods and classes.
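As a minimal sketch of unit tests at the level Sommerville describes, the hypothetical `Stack` class below is exercised method by method with Python's built-in `unittest` module. Each test checks one behavior of one component in isolation, and the suite can be run by passing the module name to `python -m unittest`.

```python
import unittest


class Stack:
    """A small class under test: a last-in, first-out stack."""

    def __init__(self) -> None:
        self._items: list = []

    def push(self, item) -> None:
        self._items.append(item)

    def pop(self):
        if not self._items:
            raise IndexError("pop from empty stack")
        return self._items.pop()

    def is_empty(self) -> bool:
        return not self._items


class StackTest(unittest.TestCase):
    """Unit tests: each one exercises a single behavior of Stack."""

    def test_new_stack_is_empty(self):
        self.assertTrue(Stack().is_empty())

    def test_pop_returns_last_pushed_item(self):
        stack = Stack()
        stack.push(1)
        stack.push(2)
        self.assertEqual(stack.pop(), 2)

    def test_pop_on_empty_stack_raises(self):
        with self.assertRaises(IndexError):
            Stack().pop()
```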

Unit testing became popular through the TDD methodology, in which the programmer writes the test code before the actual software code (Janzen, 2005). However, in practitioners’ understanding, unit tests do not have to be written first. In the StackOverflow podcast episode “Would you board a plane safety-tested by GenAI?”, practitioners explain how they use artificial intelligence to create test cases after the code is written. Research is still needed to understand the implications of this practice. Would it harm the understandability of the test code? Would it create tests with smells?
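The TDD cycle is often summarized as red, green, refactor. The sketch below compresses one cycle into a single file using the classic FizzBuzz exercise, chosen here only for illustration: the test is written first and would fail until `fizzbuzz` exists, then just enough code is added to make it pass. In a real session you would usually add one assertion at a time and repeat the cycle.

```python
# Step 1 (red): write the test first. Running it before fizzbuzz exists fails.
def test_fizzbuzz():
    assert fizzbuzz(1) == "1"
    assert fizzbuzz(3) == "Fizz"
    assert fizzbuzz(5) == "Buzz"
    assert fizzbuzz(15) == "FizzBuzz"


# Step 2 (green): write just enough production code to make the test pass.
def fizzbuzz(n: int) -> str:
    if n % 15 == 0:
        return "FizzBuzz"
    if n % 3 == 0:
        return "Fizz"
    if n % 5 == 0:
        return "Buzz"
    return str(n)


# Step 3 (refactor): tidy up names and structure while test_fizzbuzz stays green.
```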

The various shapes

In this section, we will cover the different shapes that the community has created to group the types of tests and to suggest how many of each a test suite should have. These shapes focus on the developer-facing side of the software development life cycle, as depicted by the agile testing quadrants.

The bottom-left quadrant guides development, while the upper quadrants also depend on the developer, but with less interaction: they are set by a technical team but do not require a constant feedback loop to guide the code. The quadrants give a shared idea of the focus that each type of test has from a business perspective. Next, we will explore the different shapes known in the industry for characterizing those tests.

Why so many shapes?

Each shape is an attempt to convey how a given application should distribute its tests across the different kinds to cover different aspects of the code. Shapes are useful because a hard number is difficult to give: the right distribution depends on the context.

Google recommends: 70% unit tests, 20% integration tests, and 10% end-to-end tests

In 2015, Mike Wacker recommended a 70/20/10 split as a testing strategy: 70% unit tests, 20% integration tests, and 10% end-to-end tests. The blog post illustrates this split with the test pyramid. Further inspection might be needed to relate it to the other testing shapes.
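The 70/20/10 split is easy to turn into concrete numbers. The hypothetical helper `split_suite` below distributes a test budget across the three layers using integer arithmetic and assigns any rounding remainder to unit tests, the cheapest layer; that tie-breaking choice is an assumption, not part of the original recommendation.

```python
LAYERS = ("unit", "integration", "end_to_end")


def split_suite(total: int, percentages: tuple = (70, 20, 10)) -> dict:
    """Split `total` tests across layers according to integer percentages."""
    if sum(percentages) != 100:
        raise ValueError("percentages must add up to 100")
    # Floor each share so the budget is never exceeded.
    counts = {layer: total * pct // 100 for layer, pct in zip(LAYERS, percentages)}
    # Give whatever integer division dropped to the cheapest layer.
    counts["unit"] += total - sum(counts.values())
    return counts


print(split_suite(200))  # {'unit': 140, 'integration': 40, 'end_to_end': 20}
print(split_suite(15))   # {'unit': 11, 'integration': 3, 'end_to_end': 1}
```

Treat the result as a direction rather than a target: the shapes exist precisely because the right numbers depend on context.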

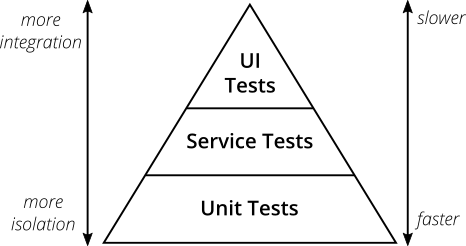

Test pyramid

So far in this introduction, we haven’t classified or suggested any approach to the different types of tests. However, different types of tests are required to tackle business needs. The test pyramid, described by Mike Cohn in the book Succeeding with Agile (Cohn, 2010) and cited by Ham Vocke on Martin Fowler’s blog, suggests the following:

- A solid base of unit tests, which ideally run as fast as possible and provide fast feedback

- In the middle, integration tests, which can be slower than unit tests but provide feedback on whether smaller pieces work together as they should

- Last but not least, end-to-end tests (depicted as UI Tests), also referred to as tests that act as if they were a user (be it a human or another system/program)
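To illustrate the two lower layers of the pyramid, here is a minimal sketch with hypothetical names (`full_name`, `UserRepository`). The unit test touches only pure logic, while the integration test exercises the repository together with a real SQLite database from Python's standard `sqlite3` module, kept in memory so it stays reasonably fast. End-to-end tests would drive the application through its UI and are left out here.

```python
import sqlite3


def full_name(first: str, last: str) -> str:
    """Pure logic: the cheapest thing to cover with unit tests."""
    return f"{first} {last}".strip()


class UserRepository:
    """Talks to a real database: a natural target for integration tests."""

    def __init__(self, conn: sqlite3.Connection) -> None:
        self.conn = conn
        self.conn.execute("CREATE TABLE IF NOT EXISTS users (first TEXT, last TEXT)")

    def add(self, first: str, last: str) -> None:
        self.conn.execute("INSERT INTO users VALUES (?, ?)", (first, last))

    def names(self) -> list:
        rows = self.conn.execute("SELECT first, last FROM users ORDER BY rowid")
        return [full_name(first, last) for first, last in rows]


# Base of the pyramid: fast, no I/O.
def test_full_name_unit():
    assert full_name("Ada", "Lovelace") == "Ada Lovelace"


# Middle of the pyramid: checks that the pieces work together with SQLite.
def test_repository_integration():
    repo = UserRepository(sqlite3.connect(":memory:"))
    repo.add("Ada", "Lovelace")
    repo.add("Alan", "Turing")
    assert repo.names() == ["Ada Lovelace", "Alan Turing"]
```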

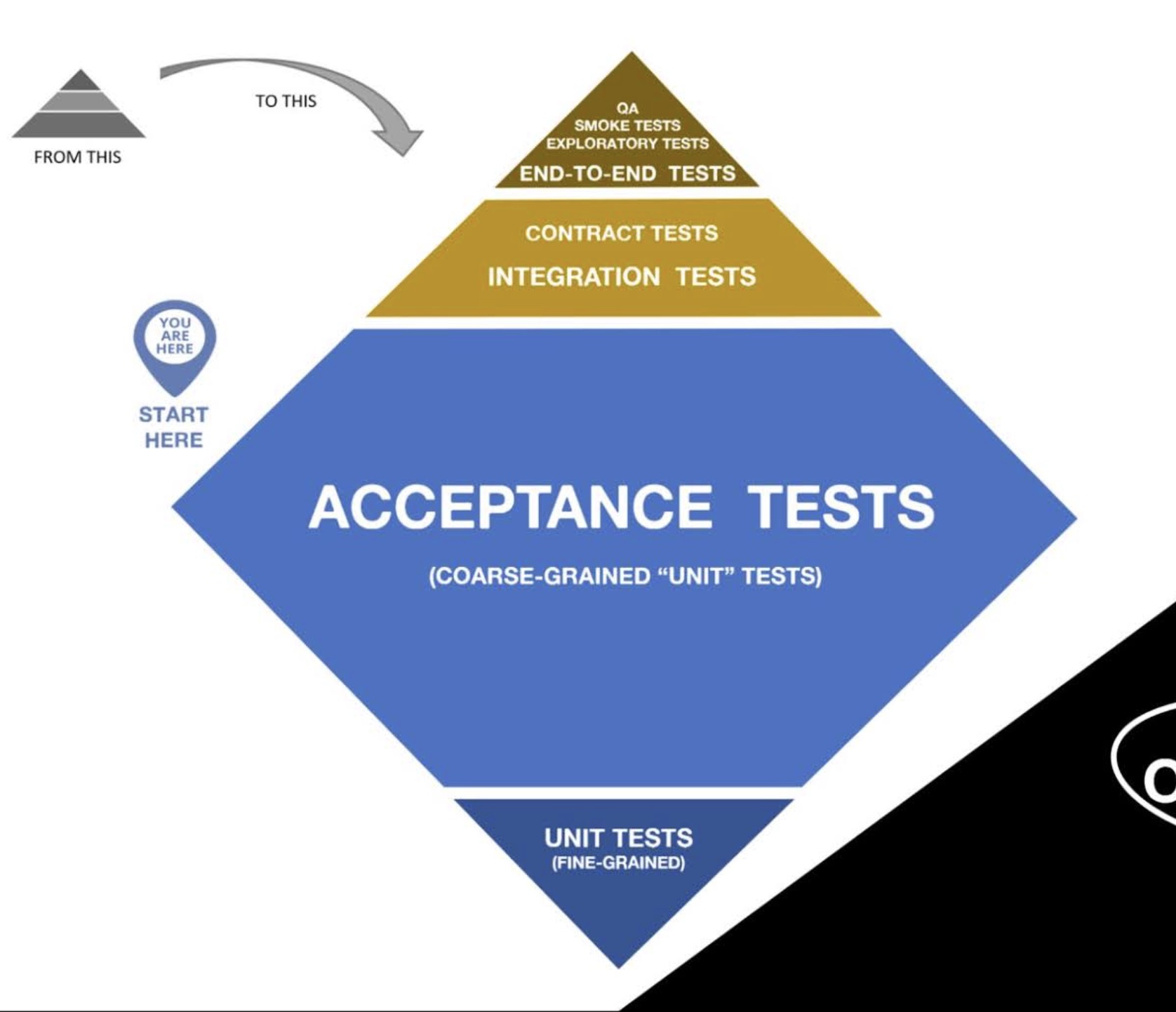

Test Trophy

Kent C. Dodds tweeted in 2018 about another shape for testing strategy, targeting JavaScript applications. I would narrow that specifically to frontend applications that use Testing Library. In his blog post entitled “The Testing Trophy and Testing Classifications”, he elaborates on the reasons for writing more integration tests, which are as follows:

- Code written for web apps mostly interacts with the browser

- Testing Library supports this approach and was built with that philosophy

Since then, Testing Library has also been featured in the Thoughtworks Technology Radar, moving to Adopt in May 2020, and with that move a wide range of developers started adopting it. As the author of different projects myself, I decided to move to Testing Library in 2021 and even looked for an easier way to migrate from Enzyme:

— Marabesi 💻🚀 (@MatheusMarabesi) September 22, 2021

Since 2021, I have created projects that mainly use Testing Library for frontend testing. Some of them are:

- GitHub Analytics - Web app to see the contributors, commits and tech stack used in a GitHub repository

- JSON tool - it allows you to quickly format JSON content with a click of a button

- Text tool - it provides utilities that developers need to interact with text daily

These are a few open-source projects that I am allowed to share; most of the closed-source projects I have worked on also use Testing Library.

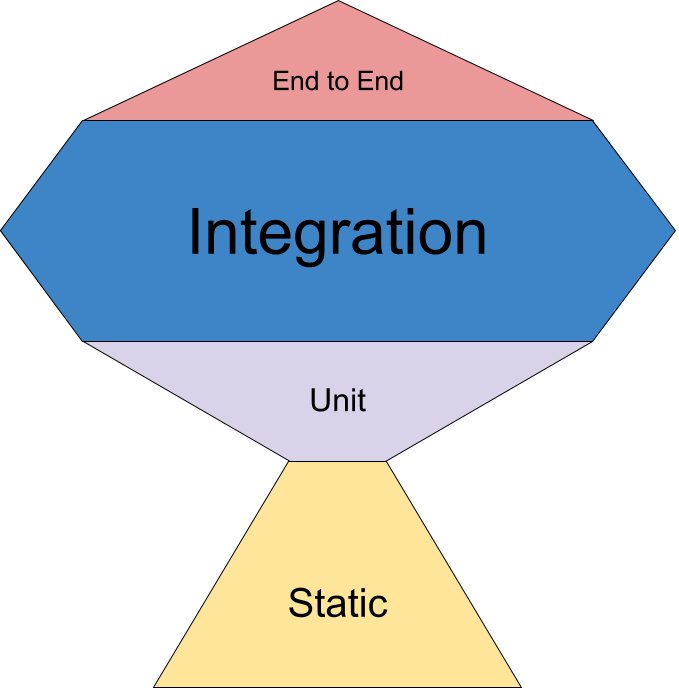

Diamond

Anti-patterns

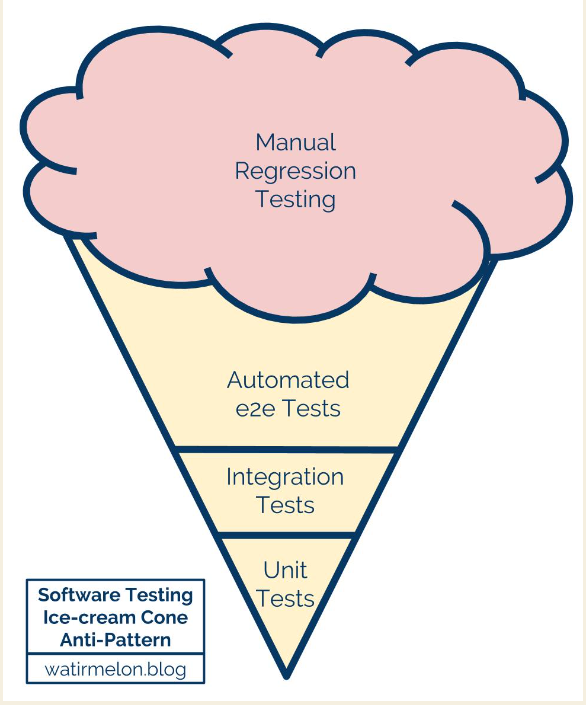

Despite the age of the Test Pyramid, automated test suites are typically made up of more slow tests than fast ones: in effect, more integration and end-to-end tests than unit tests. This leads to the Ice Cream pattern instead of the Test Pyramid. The problem with an Ice Cream test suite is the pain practitioners feel in maintaining those tests while also sustaining the delivery rate.
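One rough way to spot the Ice Cream pattern is to compare how many tests a suite has at each layer. The `suite_shape` function below is a hypothetical heuristic made up for this illustration, not a standard metric: it only checks whether the counts shrink or grow as tests get slower.

```python
def suite_shape(unit: int, integration: int, end_to_end: int) -> str:
    """Classify a suite by how its test counts change from fast to slow layers."""
    if unit >= integration >= end_to_end:
        return "pyramid"    # most tests are fast unit tests
    if end_to_end >= integration >= unit:
        return "ice cream"  # most tests are slow, UI-level tests
    return "other"          # e.g. integration-heavy shapes


print(suite_shape(300, 80, 20))  # pyramid
print(suite_shape(20, 80, 300))  # ice cream
print(suite_shape(50, 200, 30))  # other
```

Counts alone say nothing about test quality, so a result like "ice cream" is a prompt to look closer rather than a verdict.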

Conclusion

Software testing is the foundation of quality assurance, helping ensure that software works across diverse contexts and programming languages. Verification and validation stand as pivotal pillars within this process, ensuring that software aligns with the specified requirements and meets the expectations of its end users.

Accurate software documentation serves as the blueprint for crafting precise tests and verifying that the software fulfills its intended purpose. Unit testing, an integral part of this journey, has programmers rigorously examining their code during development, aiming to catch and fix potential issues early on.

Embracing Test-Driven Development (TDD) further fortifies this approach, where programmers adopt a methodology of writing tests before the production code. This proactive stance not only enhances feedback loops but also fosters an efficient and reliable software development process.

Additional resources

- This playlist walks through TDD, starting with the basics and leveling up with each video. I tried to build a video sequence that makes sense and progresses chronologically, bit by bit, from basics to more advanced concepts.

- The Quality Mindset with Holistic and Risk-Based Testing Strategies - Mark Winteringham

Check your knowledge - Software Testing

References

- Sommerville, I. (2016). Software Engineering 10th Edition (International Computer Science). Essex, UK: Pearson Education, 1–808.

- Dijkstra, E. W. (1971). Notes on structured programming.

- Aniche, M., Hermans, F., & van Deursen, A. (2019). Pragmatic software testing education. Proceedings of the 50th ACM Technical Symposium on Computer Science Education, 414–420.

- Pressman, R. S. (2005). Software engineering: a practitioner’s approach. Palgrave Macmillan.

- Pezzè, M., & Young, M. (2008). Software testing and analysis: process, principles, and techniques. John Wiley & Sons.

- Cohn, M. (2010). Succeeding with agile: software development using Scrum. Pearson Education.