AI and TDD - A match that can work?

The content here is under the Attribution 4.0 International (CC BY 4.0) license

Test-Driven Development (TDD) is a software development approach that emphasizes writing tests before writing the actual code. This method not only helps ensure that the code meets the requirements but also helps maintain code quality through refactoring. With the advent of AI, TDD can take advantage of these tools, which may lower the friction for developers to adopt it.

A space dedicated to Test-Driven Development

This blog hosts a dedicated space for TDD-related content, where you can find posts that explore the concept of TDD, its benefits, and how it can be effectively implemented in software development workflows.

Before we start, let’s clarify what AI means for the purpose of this post. Here, AI refers to LLMs (Large Language Models) such as ChatGPT, Copilot, and others that can assist in generating code and tests, and even in suggesting improvements to the codebase. Throughout this post, AI means the use of LLMs in the context of TDD workflows; whenever it matters, the specific LLM is named.

In this post, we will explore how AI can be integrated into TDD workflows, providing practical examples and insights into the benefits of this approach based on what research has shown.

Research

In the world of software development, TDD has been a game-changer. It encourages developers to think about the requirements and design of their code before implementation. However, writing tests can be time-consuming (Mock et al., 2024; Alves et al., 2025). AI is not the only attempt to improve the TDD process: automated test case generation, in which a defined algorithm generates the test cases, was able to detect more errors than the traditional TDD approach (Trinh & Truong, 2024).
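To make the idea of generating test cases from a specification more concrete, here is a minimal sketch. It is not the algorithm from Trinh & Truong (2024): it assumes a hypothetical, simplified use case format (`UseCase`, `Flow`) and derives one test case per flow, so every alternative path in the specification gets its own test.

```python
from dataclasses import dataclass, field


@dataclass
class Flow:
    """One path through a use case: the steps taken and the expected outcome."""
    name: str
    steps: list[str]
    expected: str


@dataclass
class UseCase:
    """Hypothetical, simplified use case specification."""
    title: str
    main_flow: Flow
    alternative_flows: list[Flow] = field(default_factory=list)


@dataclass
class GeneratedTest:
    name: str
    steps: list[str]
    expected: str


def generate_test_cases(use_case: UseCase) -> list[GeneratedTest]:
    """Derive one test case per flow: the main flow first, then the alternatives."""
    flows = [use_case.main_flow, *use_case.alternative_flows]
    return [
        GeneratedTest(
            name=f"{use_case.title}: {flow.name}",
            steps=list(flow.steps),
            expected=flow.expected,
        )
        for flow in flows
    ]


withdraw = UseCase(
    title="Withdraw cash",
    main_flow=Flow("valid amount", ["insert card", "enter PIN", "request 50"], "cash dispensed"),
    alternative_flows=[
        Flow("insufficient funds", ["insert card", "enter PIN", "request 5000"], "error shown"),
        Flow("wrong PIN", ["insert card", "enter wrong PIN"], "card rejected"),
    ],
)

for case in generate_test_cases(withdraw):
    print(case.name, "->", case.expected)
```

Each generated entry still has to be turned into an executable test, but the specification, not memory, decides which scenarios exist.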

In that sense, AI can assist in generating tests, suggesting improvements, and even automating parts of the TDD process. Research on writing tests has shown that AI can significantly reduce the time spent on them; however, an experienced developer is still needed to ensure that the tests are meaningful and cover the necessary scenarios (Mock et al., 2024). Moreover, generated code might not be secure (Shin, 2024), which reinforces the need for an expert.

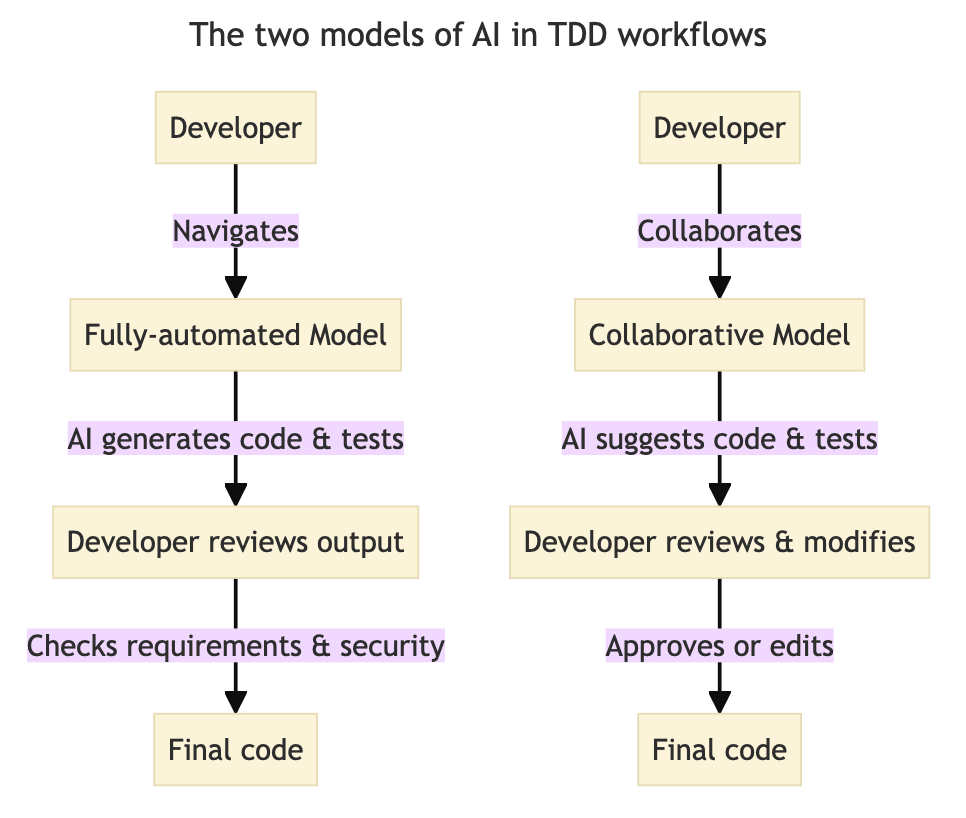

TDD has been studied in the context of generative AI in an attempt to improve the way developers write code, mostly aiming to incorporate AI input into the TDD steps (Mock et al., 2024; Shin, 2024). Mock et al. (2024) used two different scenarios:

- Fully-automated model (left) - the developer acts as a navigator, checking the AI-generated code and tests and making sure that they meet the requirements and are secure.

- Collaborative model (right) - the AI suggests tests and code at the developer's request; the developer modifies the output, and then the flow repeats.

The fully-automated model lets the AI generate tests and code without human intervention, while in the collaborative model the AI suggests tests and code that the developer then reviews and modifies. Mock et al. (2024) used a Python script to gather the metrics and integrate with the ChatGPT API; in their experiment, the fully-automated approach took less time to complete the task.
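The difference between the two models can be sketched as a single loop in which only the review step changes. This is not the script used by Mock et al. (2024): the model below is a hypothetical stub standing in for an LLM API call, and `tdd_session`, `run_tests` and `developer_fix` are illustrative names. With no reviewer the loop is fully automated and the developer only inspects the final result; with a reviewer, each suggestion is edited before it runs.

```python
from typing import Callable, Optional

Model = Callable[[str], str]      # prompt -> generated source code
Reviewer = Callable[[str], str]   # generated code -> code after human edits


def run_tests(source: str) -> bool:
    """Run the generated code against a fixed test; True if it passes.

    Never exec untrusted model output outside a sandbox; this is a toy example.
    """
    namespace: dict = {}
    try:
        exec(source, namespace)
        return namespace["add"](2, 3) == 5
    except Exception:
        return False


def tdd_session(model: Model, reviewer: Optional[Reviewer] = None,
                max_rounds: int = 3) -> tuple[str, int, bool]:
    """Ask the model for code until the test passes or the rounds run out.

    reviewer=None  -> fully-automated: output goes straight to the tests.
    reviewer given -> collaborative: the developer edits each suggestion first.
    Returns (final source, rounds used, whether the test passed).
    """
    prompt = "Write add(a, b) so that add(2, 3) == 5."
    source = ""
    for round_number in range(1, max_rounds + 1):
        source = model(prompt)
        if reviewer is not None:
            source = reviewer(source)
        if run_tests(source):
            return source, round_number, True
        prompt += "\nThe previous attempt failed the test; try again."
    return source, max_rounds, False


def make_stub_model() -> Model:
    """Hypothetical stand-in for an LLM API: a wrong answer, then a right one."""
    answers = iter([
        "def add(a, b):\n    return a - b\n",
        "def add(a, b):\n    return a + b\n",
    ])
    return lambda prompt: next(answers)


def developer_fix(source: str) -> str:
    """Hypothetical review step: the developer spots the wrong operator."""
    return source.replace("a - b", "a + b")


print(tdd_session(make_stub_model())[1:])                          # (2, True)
print(tdd_session(make_stub_model(), reviewer=developer_fix)[1:])  # (1, True)
```

The round counts in this toy run only reflect the stub's canned answers; they say nothing about the timings measured in the study.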

Piya and Sullivan (2024) also explored the use of LLMs in TDD workflows, focusing on a flow in which the LLM generated tests and code that the developer could then review and modify; developers could also use the LLM to adjust the code. Their work used the ChatGPT website directly.

Shin (2024) adopted a similar approach: ChatGPT scored best in terms of meeting the requirements, while Copilot and Gemini scored the same. The next section discusses these approaches to combining TDD and LLMs.

Discussion

As interesting as it might sound, the fully-automated approach is still a technique that needs refinement. The selling point of vibe coding is that AI can write code for you (research has shown that it might even generate code that is no more understandable than code written by a human developer (Maes, 2025)), but it still requires a human to review and modify the code to ensure that it meets the requirements and is secure.

So far, academic research has focused on how AI can assist TDD workflows, with experiments run in controlled environments. Despite positive results with students in brownfield projects (Shihab et al., 2025), research still has a way to go before it supports practitioners who deal with existing codebases that are spaghetti code and hard to maintain. From this point of view, using AI to build the safety net that TDD creates manually, in support of software evolution, becomes even more urgent. Attention must also be paid to the quality of AI-generated test code, as a human is still needed to ensure that the tests are meaningful and cover the necessary scenarios (Alves et al., 2025).
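One way to build such a safety net around existing code, whether or not AI writes it, is a characterization test, a technique described by Michael Feathers in Working Effectively with Legacy Code: record what the code does today and fail when a change alters that behavior. A minimal sketch, where the pricing function is a hypothetical stand-in for hard-to-maintain code and `record_behavior` and `check_behavior` are illustrative helpers rather than a library API:

```python
def legacy_price(quantity: int, customer: str) -> float:
    """Hypothetical legacy function: tangled rules nobody fully remembers."""
    price = quantity * 10.0
    if customer == "vip":
        if quantity > 10:
            price = price * 0.8
        else:
            price = price * 0.9
    elif quantity > 100:
        price -= 50
    return round(price, 2)


def record_behavior(func, inputs):
    """Capture what the code does today, right or wrong, as the baseline."""
    return {args: func(*args) for args in inputs}


def check_behavior(func, baseline):
    """Return the inputs whose output differs from the recorded baseline."""
    return [args for args, expected in baseline.items() if func(*args) != expected]


inputs = [(1, "regular"), (5, "vip"), (11, "vip"), (101, "regular"), (0, "vip")]
baseline = record_behavior(legacy_price, inputs)


def refactored_price(quantity: int, customer: str) -> float:
    """A candidate refactoring that must preserve the recorded behavior."""
    base = quantity * 10.0
    if customer == "vip":
        return round(base * (0.8 if quantity > 10 else 0.9), 2)
    if quantity > 100:
        return round(base - 50, 2)
    return round(base, 2)


print(check_behavior(refactored_price, baseline))  # [] means nothing changed
```

The baseline captures current behavior, bugs included: the test answers "did anything change?" rather than "is it correct?", which is what a refactoring safety net needs.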

Professional software development has long been a team sport, dating back to the agile boom (Zuill & Meadows, 2022). Research suggests that developers read more code than they write (Hermans, 2021). Rather than prioritizing speed alone, LLM-assisted development should focus on fostering understandability, maintainability, and a sustainable pace. Achieving a task quickly is a beneficial consequence, but quality should remain a primary concern, unless the rules of the game change. Is it possible to generate things quickly and avoid maintenance altogether?

AI in TDD Workflows in practice

In this section, we explore how AI can be integrated into TDD workflows, with practical examples based on my own experience.

ReactJs

Last year, I experimented with using AI to generate tests for a ReactJs application. I used a combination of AI tools to generate tests for the components, ensuring that they were covered by unit tests.

A dedicated space for ReactJs

I share my learnings and experiences in a dedicated space for ReactJs, where you can find posts that explore ReactJs in more depth.

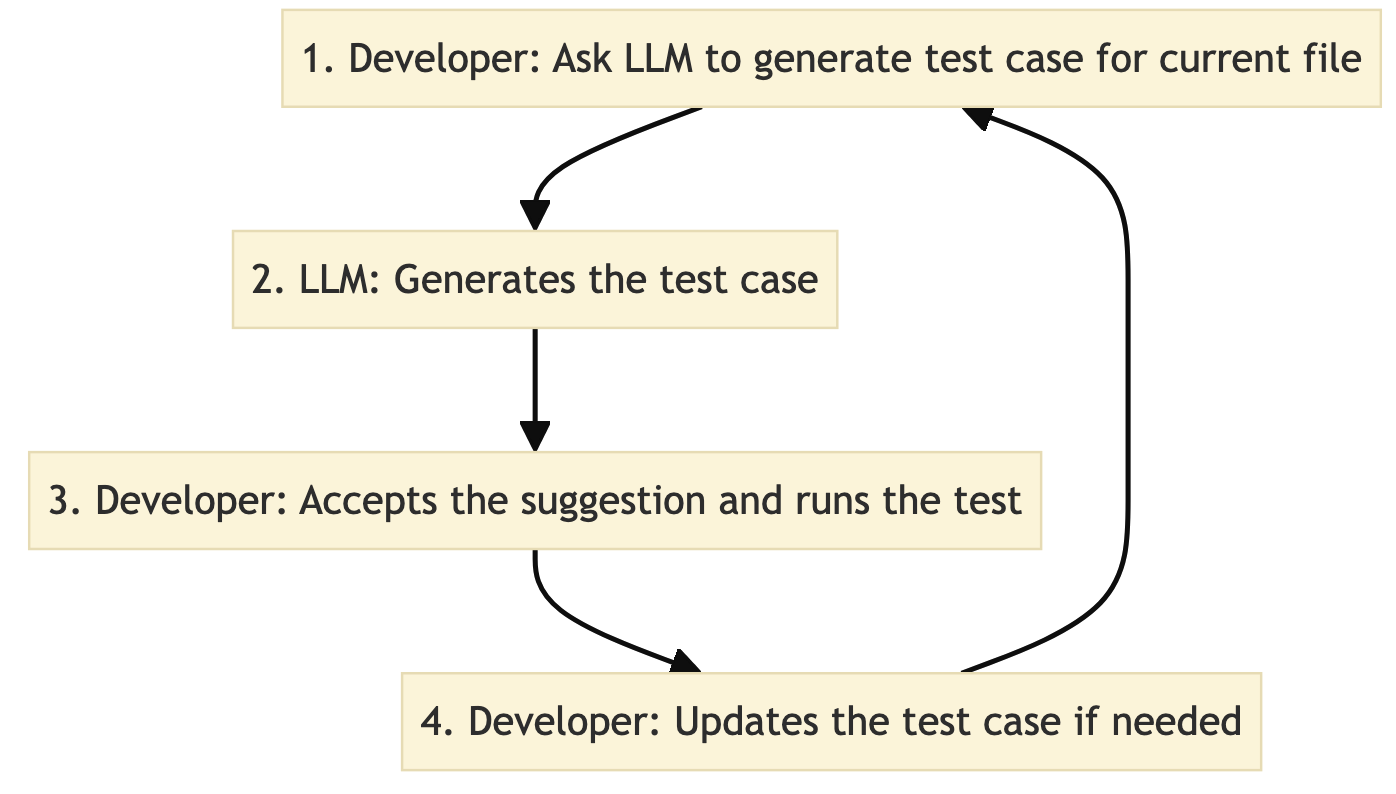

The AI (Copilot) was able to generate tests that covered the basic functionality of the components, but manual intervention was still required to make the tests meaningful. The most common help needed was identifying the test doubles required to isolate the components from their dependencies. The flowchart for this workflow is similar to what Mock et al. (2024) reported.

A few things to note about this flow:

- The developer is actively involved in the process, reviewing and modifying the AI-generated tests.

- The AI is used to generate the initial test cases, which can save time and effort. However, in my practical experience, LLMs were not able to generate the test doubles needed to isolate the components from their dependencies in some specific cases.

- The developer is responsible for ensuring that the tests are meaningful, which is a crucial part of TDD.
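Here is a sketch of the kind of isolation the AI most often missed. The original work was on React components, where `jest.mock` or an injected prop usually plays this role; to keep the example self-contained it uses Python's standard `unittest.mock`. `UserGreeting` and its `fetch_user` dependency are hypothetical. The component receives its dependency instead of creating it, so a test double can replace the real API client.

```python
from unittest.mock import Mock


class UserGreeting:
    """Hypothetical component: renders a greeting from data fetched by a client."""

    def __init__(self, api_client):
        self.api_client = api_client  # injected dependency, so tests can swap it

    def render(self, user_id: int) -> str:
        user = self.api_client.fetch_user(user_id)
        if user is None:
            return "User not found"
        return f"Hello, {user['name']}!"


def test_renders_name_from_client():
    # Stub: returns canned data instead of calling a real API.
    client = Mock()
    client.fetch_user.return_value = {"name": "Ada"}

    assert UserGreeting(client).render(42) == "Hello, Ada!"
    # The same double also verifies the interaction with the dependency.
    client.fetch_user.assert_called_once_with(42)


def test_renders_fallback_when_user_missing():
    client = Mock()
    client.fetch_user.return_value = None

    assert UserGreeting(client).render(7) == "User not found"


test_renders_name_from_client()
test_renders_fallback_when_user_missing()
```

Without the injected client, the test would depend on a live API, which is exactly the coupling the developer had to break by hand.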

In addition, prompts need to be specific to the context of the code being tested and follow a consistent pattern to make them reproducible. Prompt engineering might help with that. For example, asking the LLM to respond in JSON might limit hallucinations (Boonstra, 2024).
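A minimal sketch of such a prompt, assuming a hypothetical response schema (`tests` with `name`, `given` and `then` fields): the template fixes the context and the answer shape so the request is reproducible, and the parser rejects any reply that is not valid JSON in that shape. This does not prevent hallucinated content, but it makes malformed answers easy to detect before they reach the codebase. No real LLM API is called here.

```python
import json

# Hypothetical prompt template: a fixed context and a fixed response shape
# make the request reproducible and the answer machine-checkable.
PROMPT_TEMPLATE = """You are helping with test-driven development.
Code under test:
{source}

Reply ONLY with JSON of the form:
{{"tests": [{{"name": "<test name>", "given": "<setup>", "then": "<expected result>"}}]}}
"""

REQUIRED_KEYS = {"name", "given", "then"}


def build_prompt(source: str) -> str:
    """Fill the template with the code the tests should target."""
    return PROMPT_TEMPLATE.format(source=source)


def parse_test_plan(response: str) -> list[dict]:
    """Accept only valid JSON in the agreed shape; raise ValueError otherwise."""
    data = json.loads(response)  # json.JSONDecodeError is a ValueError subclass
    if not isinstance(data, dict) or not isinstance(data.get("tests"), list):
        raise ValueError("expected an object with a 'tests' list")
    for test in data["tests"]:
        if not isinstance(test, dict) or not REQUIRED_KEYS.issubset(test):
            raise ValueError(f"malformed test entry: {test!r}")
    return data["tests"]


reply = '{"tests": [{"name": "greets user", "given": "user Ada", "then": "Hello, Ada!"}]}'
print([test["name"] for test in parse_test_plan(reply)])  # ['greets user']
```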

Test case quality

Note that in steps 1 to 4 the developer is actively involved, reviewing and modifying the AI-generated code; however, test quality is not explicitly addressed in the flow. Research has shown that AI generates test cases with test smells (Alves et al., 2025). This means that AI-generated tests might not be as effective as manually written ones, so it remains important to ensure that the tests are maintainable.

This leads to the point that the TDD workflow needs an additional step: refactoring the test code at the same pace as the production code, if not faster. Given that AI speeds up writing tests, using part of that gain to pay back this debt makes sense.
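As an example of paying that debt back, here is a hypothetical generated test in a common shape (one test, several unlabelled assertions) next to a refactored version; `format_price` is an illustrative function. The refactored test uses `unittest`'s `subTest`, so each case is named and every failing case is reported, whereas in the first version the first failing assertion stops the test and hides the rest.

```python
import unittest


def format_price(cents: int) -> str:
    """Production code under test (hypothetical)."""
    return f"${cents // 100}.{cents % 100:02d}"


class FormatPriceBefore(unittest.TestCase):
    # Typical generated shape: one test, many unlabelled assertions.
    def test_format_price(self):
        self.assertEqual(format_price(0), "$0.00")
        self.assertEqual(format_price(5), "$0.05")
        self.assertEqual(format_price(199), "$1.99")
        self.assertEqual(format_price(10000), "$100.00")


class FormatPriceAfter(unittest.TestCase):
    # Refactored: a table of named cases; each failure says which case broke.
    CASES = [
        ("zero", 0, "$0.00"),
        ("single-digit cents are zero-padded", 5, "$0.05"),
        ("dollars and cents", 199, "$1.99"),
        ("whole dollars", 10000, "$100.00"),
    ]

    def test_format_price(self):
        for description, cents, expected in self.CASES:
            with self.subTest(description, cents=cents):
                self.assertEqual(format_price(cents), expected)
```

Saved as a module, `python -m unittest <module_name>` runs both test classes.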

Is it a match?

The established TDD workflow, a cycle of writing a test, writing the code to pass the test, and then refactoring the code, is now being extended with a new step: using AI to generate the tests and even the production code, as well as to suggest improvements. As a result, the red-green-refactor cycle has mutated into:

- red-green-AI-refactor

- red-AI-green-AI-refactor
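A minimal illustration of one cycle, with comments marking where the AI steps listed above would slot in. The leap-year example is hypothetical; the test takes the implementation as a parameter only so that the same test can check both the green and the refactored version in one snippet.

```python
from typing import Callable


# Red: the test is written first, against the behavior we want. Running it
# against a placeholder such as `lambda year: False` fails, as it should.
def check_leap_year(leap_year: Callable[[int], bool]) -> None:
    cases = {2024: True, 2023: False, 1900: False, 2000: True}
    for year, expected in cases.items():
        assert leap_year(year) == expected, f"leap_year({year}) should be {expected}"


# Green: the simplest code that passes. In "red-AI-green" this is the step
# where an LLM proposes the implementation and the developer checks it.
def leap_year_green(year: int) -> bool:
    if year % 400 == 0:
        return True
    if year % 100 == 0:
        return False
    return year % 4 == 0


# Refactor: same behavior, expressed more directly. In "green-AI-refactor"
# the LLM may suggest this; the unchanged test decides whether to accept it.
def leap_year_refactored(year: int) -> bool:
    return year % 4 == 0 and (year % 100 != 0 or year % 400 == 0)


check_leap_year(leap_year_green)
check_leap_year(leap_year_refactored)
```

Whichever step the AI takes over, the test written in the red step stays the arbiter: an AI-suggested refactoring is accepted only if `check_leap_year` still passes.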

LLMs can assist in generating tests and code, and even in refactoring, with encouraging results; however, all of it requires a human in the loop who can assess the output. This goes back to the point that, to practice TDD with AI (or to assess its output), one first needs to understand TDD, not only in theory but also in practice, which is a skill that takes time to develop.

References

- Mock, M., Melegati, J., & Russo, B. (2024). Generative AI for Test Driven Development: Preliminary Results. International Conference on Agile Software Development, 24–32.

- Alves, V., Bezerra, C., Machado, I., Rocha, L., Virgínio, T., & Silva, P. (2025). Quality Assessment of Python Tests Generated by Large Language Models. ArXiv Preprint ArXiv:2506.14297.

- Trinh, T.-B., & Truong, N.-T. (2024). Improving Test-Driven Development with Automated Test Case Generation from Use Case Specifications.

- Shin, W. (2024). A Study on Test-Driven Development Method with the Aid of Generative AI in Software Engineering. International Journal of Internet, Broadcasting and Communication, 16(4), 194–202.

- Piya, S., & Sullivan, A. (2024). LLM4TDD: Best practices for test driven development using large language models. Proceedings of the 1st International Workshop on Large Language Models for Code, 14–21.

- Maes, S. H. (2025). The gotchas of AI coding and vibe coding. It’s all about support and maintenance. OSF.

- Shihab, M. I. H., Hundhausen, C., Tariq, A., Haque, S., Qiao, Y., & Wise, B. M. (2025). The Effects of GitHub Copilot on Computing Students’ Programming Effectiveness, Efficiency, and Processes in Brownfield Programming Tasks. ICER.

- Zuill, W., & Meadows, K. (2022). Software Teaming: A Mob Programming, Whole-Team Approach. Pearson Addison-Wesley.

- Hermans, F. (2021). The Programmer’s Brain: What every programmer needs to know about cognition. Simon and Schuster.

- Boonstra, L. (2024). Prompt engineering (p. 60). Kaggle. https://www.gptaiflow.tech/assets/files/2025-01-18-pdf-1-TechAI-Goolge-whitepaper_Prompt%20Engineering_v4-af36dcc7a49bb7269a58b1c9b89a8ae1.pdf